By Deyan Nikolic

On Thursday 14th August, Newington had the honour of hosting Professor Toby Walsh, who spoke in the OBLT about the AI singularity and what the future of AI might look like. Prof. Walsh is one of the leading and most celebrated AI researchers, having written several books on the topic, received an M.A. degree in theoretical physics, and given a TED Talk on the use of autonomous weapons in war. He has heavily criticised Russia’s use of drones and other autonomous devices in the war, which resulted in him being banned from ever visiting the country.

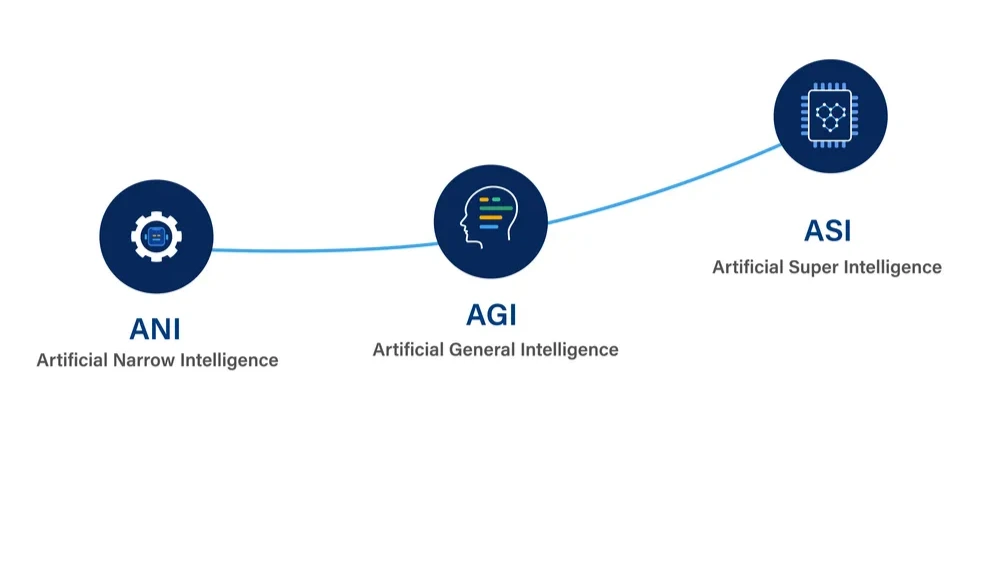

What is AI, AGI, and ASI?

To start off the night, the professor discussed AI, AGI (Artificial General Intelligence), and ASI (Artificial Super Intelligence): what they are, and what they mean for the future. Current systems such as ChatGPT fall into the category of AI, where the AI isn’t quite as smart as an expert in the field but is probably smarter than the average human. The second level is AGI, where the AI is just below or equal to the smartest human in the field. The last, ASI, is when the AI surpasses human intelligence in all aspects. This is seen as the ultimate goal for AI.

Objections For Why We Can’t Achieve AGI/ASI:

But is ASI actually achievable? There are many objections to the idea that ASI is possible. The first of these is mind vs computation. The data centres that currently power AIs such as ChatGPT take up an enormous amount of space and cost millions, if not billions, to build because of all the Nvidia GPUs they need. A human brain, on the other hand, takes up next to no space yet is currently more intelligent than an AI. This raises the point that to create AGI or ASI we would need far more GPUs and other hardware. Prof. Walsh then brought up the Chinese room, a thought experiment devised by philosopher John Searle. The basic idea goes like this: a man who does not understand Chinese is isolated in a room, but he has a detailed book of rules for manipulating Chinese symbols. Someone slips questions on notes into the room, and the man uses the book to write replies without understanding what he is doing. When he hands a reply back to the person outside the room, it seems as though the question has been answered, yet the man inside had no understanding of Chinese. These are but a couple of the reasons why people believe AGI and ASI can’t be achieved. In spite of these objections, it is still very possible that we will achieve AGI/ASI in the foreseeable future.

Work:

The impact that AI will have on work is on all of our minds. In the near future it is very possible that jobs such as assistants or other entry-level jobs will be taken over by AI. Prof. Walsh brought this up; however, he approached it in a way that I hadn’t considered before. He drew links to the Industrial Revolution, over 100 years ago, where the use of machines and advocacy for workers’ rights resulted in workers having a two-day weekend, giving them a break and more time with their families. Prof. Walsh believes that a similar thing will happen when AI starts to do some of our jobs: it will allow us to have another day off to do what we enjoy. In response to the argument that people will still lose their jobs, he pointed out that there are many jobs that can’t be replaced by AI and that are in great need of workers, such as carers for the elderly. Overall, Prof. Walsh viewed the impact of AI in this area in a very positive light, which I haven’t heard before, and I will be very interested to see how it turns out.

Warfare:

It seemed that whilst Prof. Walsh tried to put as positive a light as possible on AI, he couldn’t for one use of AI: war. He is so concerned about how AI will be used for autonomous warfare (drones and the like) that he put together an open letter calling for a ban on offensive autonomous weapons, which attracted 20,000 signatures. He is so concerned about this topic because when machines start to do the killing for us, there is not the same understanding of the impact. This can easily lead to situations that get out of hand, where it is drone vs drone and it becomes a war of who can use the most drones the fastest.

Is It the End of Humanity?

This might be the one question that no one wants the answer to: whether the greatest creation humans could make ends up destroying us (this is discussed in a report called AI 2027 by several very knowledgeable AI experts). Prof. Walsh’s view was that it wouldn’t be the end, but that it would certainly pose several difficulties, such as the spread of misinformation. He believes that it is not the intelligence that we should worry about but rather the power that we would be giving it over our lives. We should be careful about what it might do with that power and how it might use it, whether for good or for destruction.

Positive Uses For AI:

To end on a positive note, Prof. Walsh discussed how AI can be used to better our lives in many ways. One example he gave was the possibility that AI will one day be able to help cure diseases and help those in need. Another is that it will be able to accelerate scientific research and the creation of new technologies. So, what it really comes down to is: do the possible negatives outweigh the possible benefits?

Overall, Professor Toby Walsh believed that whilst there are definitely some negatives of AI, the positives are too great, and the chance that AI destroys humanity is not high enough, to justify not using it. It was an amazing talk, and I would definitely recommend going to any future talks in the OBLT, as they are great opportunities to learn about something you might not know much about.

Thanks for reading!